Adding a Site Map and Robots.txt

Adding a sitemap.xml, and a robots.txt that references it, to aid SEO.

Required dependencies

We use next-sitemap to generate the site map and robots.txt:

```shell
npm i next-sitemap --save
```

Configuring the site map

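next-sitemap reads its settings from a configuration file, by default named next-sitemap.config.js. We use an ES module named next-sitemap.config.mjs instead, which is why the build script below passes it explicitly with --config: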

```js
const siteUrl = process.env.SITE_URL || "https://example.com";

/** @type {import('next-sitemap').IConfig} */
const config = {
  siteUrl,
  sitemapBaseFileName: "sitemap/sitemap",
  changefreq: "weekly",
  exclude: ["/cdn-cgi/"],
  transform: async (config, path) => {
    // Normalize every path so that it ends with a trailing slash
    if (!path.endsWith("/")) {
      path += "/";
    }

    // The remaining fields mirror next-sitemap's default transformation
    return {
      loc: path, // => this will be exported as http(s)://<config.siteUrl>/<path>
      changefreq: config.changefreq,
      priority: config.priority,
      lastmod: config.autoLastmod ? new Date().toISOString() : undefined,
      alternateRefs: config.alternateRefs ?? [],
    };
  },
  generateRobotsTxt: true,
  robotsTxtOptions: {
    policies: [
      {
        userAgent: "*",
        allow: "/",
        disallow: ["/cdn-cgi/"],
      },
    ],
  },
};

export default config;
```

The site map and robots.txt are generated in the prebuild script of package.json:

```json
{
  "scripts": {
    ...
    "prebuild": "next-sitemap --config next-sitemap.config.mjs",
    ...
  }
}
```

next-sitemap's own documentation sets it up as a postbuild script, and in some cases it is advisable to add the same step to the postbuild script here as well.
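The transform function in the configuration above appends a trailing slash only where one is missing. All other fields are returned as in next-sitemap's default transformation. One way to check that logic is to run a standalone copy of it with plain Node.js. The sketch below assumes that a copy of the function is representative of what next-sitemap calls. The sample config values are made up for illustration and are not next-sitemap's resolved configuration.

```js
// Standalone copy of the transform from next-sitemap.config.mjs,
// runnable with plain Node.js (next-sitemap itself is not needed).
const transform = async (config, path) => {
  // Append a trailing slash only when it is missing: "/about" -> "/about/"
  const loc = path.endsWith("/") ? path : `${path}/`;
  return {
    loc,
    changefreq: config.changefreq,
    priority: config.priority,
    lastmod: config.autoLastmod ? new Date().toISOString() : undefined,
    alternateRefs: config.alternateRefs ?? [],
  };
};

// Hypothetical sample values standing in for the resolved config
const sampleConfig = { changefreq: "weekly", priority: 0.7, autoLastmod: false };

async function main() {
  for (const path of ["/", "/about", "/about/"]) {
    const entry = await transform(sampleConfig, path);
    console.log(entry.loc, entry.changefreq);
  }
}

main();
```

Both "/about" and "/about/" come out as "/about/", and "/" is left unchanged, so every loc in the site map uses the trailing-slash form.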

When we run the build command, the prebuild script now runs first and creates the site map and robots.txt files:

```
/var/prj/static-nextjs % npm run build

> [email protected] prebuild
> next-sitemap --config next-sitemap.config.mjs

✨ [next-sitemap] Loading next-sitemap config: file:///private/var/prj/static-nextjs/next-sitemap.config.mjs
✅ [next-sitemap] Generation completed
┌───────────────┬────────┐
│ (index)       │ Values │
├───────────────┼────────┤
│ indexSitemaps │ 1      │
│ sitemaps      │ 1      │
└───────────────┴────────┘
-----------------------------------------------------
 SITEMAP INDICES
-----------------------------------------------------

   ○ https://example.com/sitemap/sitemap.xml

-----------------------------------------------------
 SITEMAPS
-----------------------------------------------------

   ○ https://example.com/sitemap/sitemap-0.xml

> [email protected] build
> next build

  ▲ Next.js 14.2.4
...
```

The robots.txt and site map files are created in the public directory before the build runs, so they are included in the build output:

```
...
├── package-lock.json
├── package.json
├── postcss.config.mjs
├── public
│   ├── next.svg
│   ├── robots.txt
│   ├── sitemap
│   │   ├── sitemap-0.xml
│   │   └── sitemap.xml
│   └── vercel.svg
├── src
│   ├── app
│   │   ├── about
│   │   │   └── page.tsx
│   │   ├── favicon.ico
...
```

Removing Host from robots.txt

The robots.txt generated by the configuration above looks like this:

# *

User-agent: *

Allow: /

Disallow: /cdn-cgi/

# Host

Host: https://example.com

# Sitemaps

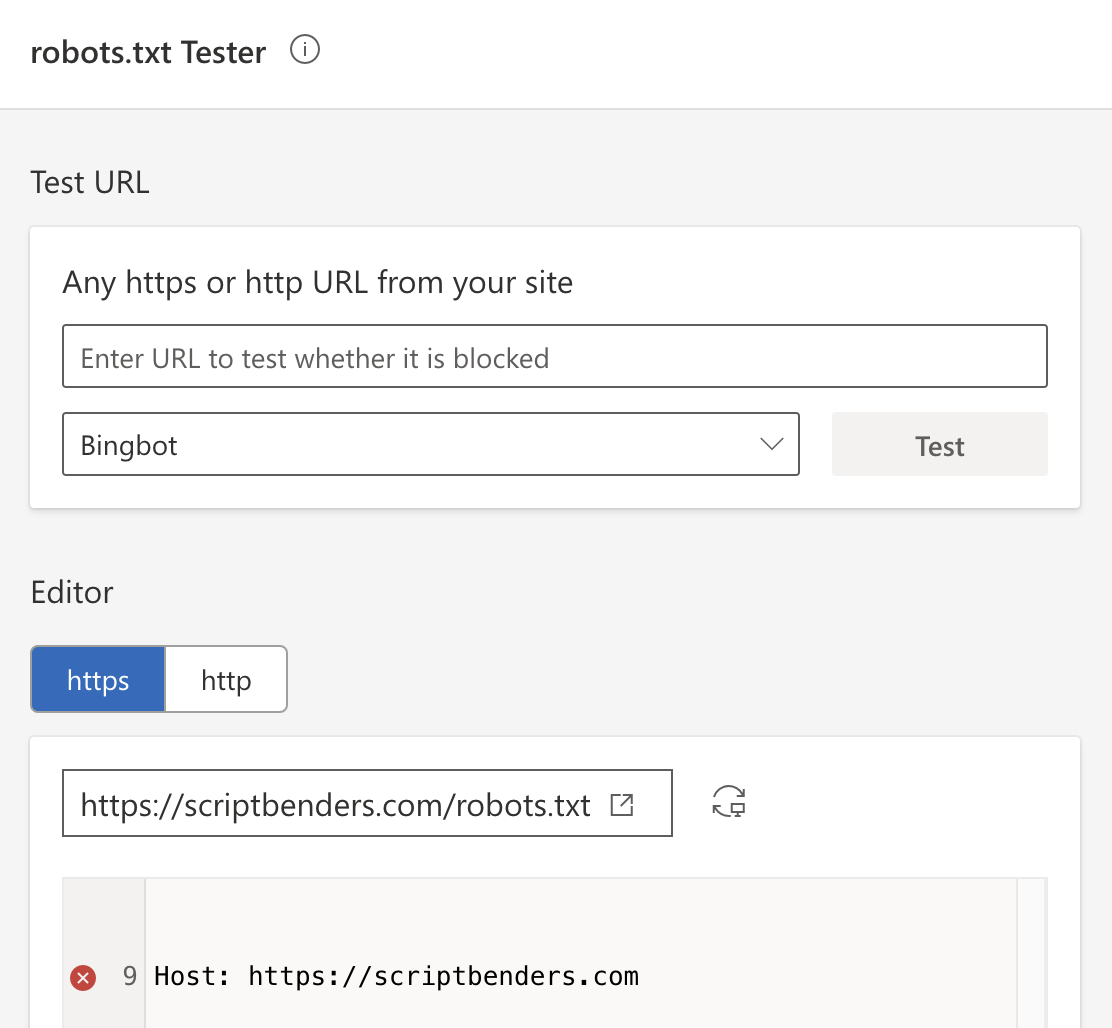

Sitemap: https://example.com/sitemap/sitemap.xmlSome search engines report having the Host entry as a mistake. Here is what Bing Webmaster Tools reports for this website:

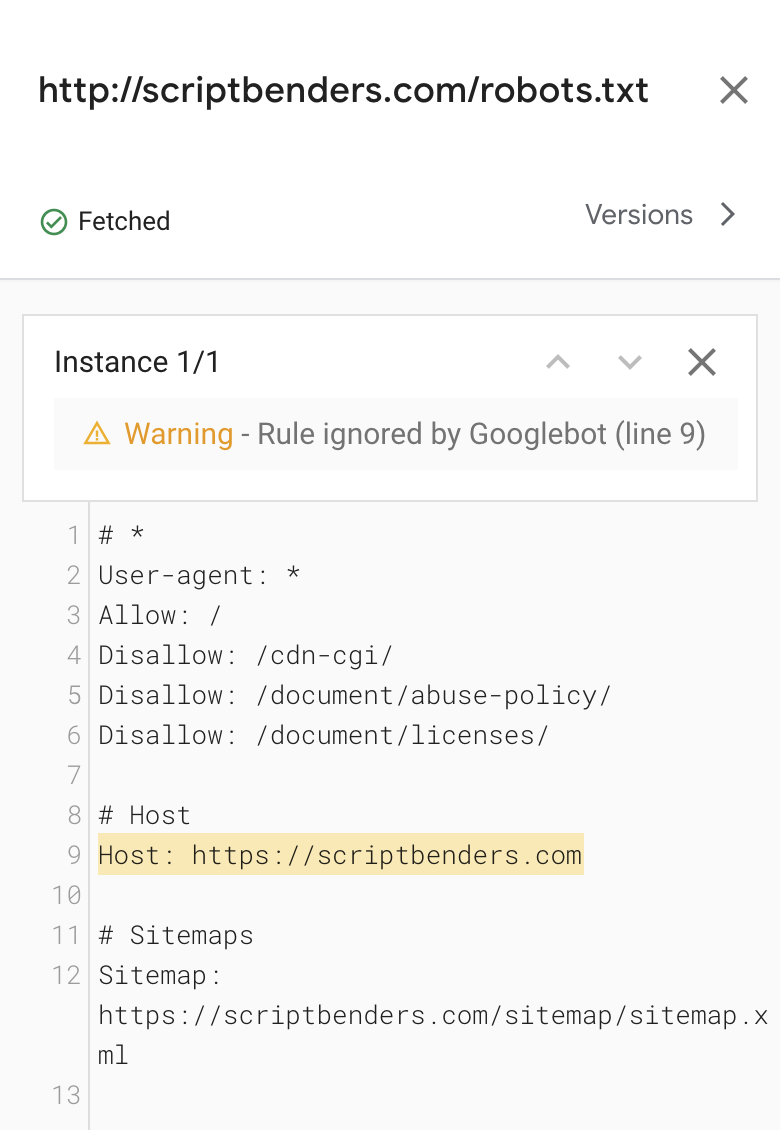

The warning issued by Google Search Console is less dramatic yet similarly disapproving:

As of this writing, next-sitemap has no setting to omit the Host record itself. However, a workaround has been proposed on GitHub. We implement it with the following modification to next-sitemap.config.mjs:

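```js
...
  generateRobotsTxt: true,
  robotsTxtOptions: {
    // Remove the "# Host" section from the generated robots.txt.
    // This relies on the exact text next-sitemap emits for that section.
    transformRobotsTxt: async (_, robotsTxt) => {
      const withoutHost = robotsTxt.replace(`# Host\nHost: ${siteUrl}\n\n`, "");
      return withoutHost;
    },
    policies: [
      {
        ...
```

Further reading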

The topic of site maps, and the larger topic of SEO, has many aspects. A good resource for learning about them is the episode Perfect Sitemaps for SEO of the Syntax podcast.

Another interesting source is the Extensive /robots.txt guide for SEOs, which covers, among others:
